In the second half of the twentieth century, in the works of philosophers, mathematicians, engineers, and social scientists, the faculty of reason was radically reconceived. In the models of game theory, decision theory, artificial intelligence, and military strategy, the algorithmic rules of rationality replaced the self-critical judgments of reason. The reverberations of this shift from reason to rationality still echo in contemporary debates over human nature, planning and policy, and, especially, the direction of the human sciences.

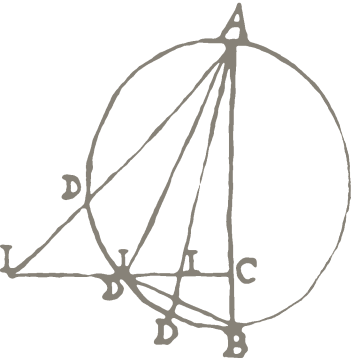

The background to this transformation lies in the history of rules. In major European languages from the early Middle Ages until the mid-nineteenth century, the chief sense of regula/rule/Regel/règle derived from the Regula Sancti Benedicti (6th century CE), laid down for Christian monks, and referred to a moral precept, a model or code of conduct for a way of life. This sense is still vivid in Enlightenment discussions of whether art should follow rules or whether it is (in Kant’s words) genius that “gives the rule [i.e., model] to art.” In the early decades of the nineteenth century, a tertiary meaning of rules as algorithms (first and foremost the rules of arithmetic) began its rise to dominance, in contexts as diverse as the first effective calculating machines, the creation of national civil service bureaucracies, and attempts to guarantee the logical solidity of mathematical proofs.

By the early 1950s, the dream of reducing intelligence, decision-making, strategic planning, and reason itself to algorithmic rules had spread like wildfire to psychology, economics, political theory, sociology, and even philosophy. The story of how reason became rule-bound is one of Cold War ambitions, technological fantasies come true (especially in the realm of computers), quantification and quantiphrenia, and, above all, the eclipse of the faculty of judgment as an essential component of reason.