Since the late twentieth century, huge databases have become a ubiquitous feature of science, and "Big Data" has become a buzzword for an ostensibly new and distinctive mode of knowledge production. Some observers have even suggested that Big Data has introduced a new epistemology of science, one in which the phases of data gathering and knowledge production are more explicitly separated than they were in the past. It is therefore vitally important not only to reconstruct a history of "data" over the *longue durée* (from the early modern period to the present), but also to critically examine historical claims about the distinctiveness of modern data practices and epistemologies.

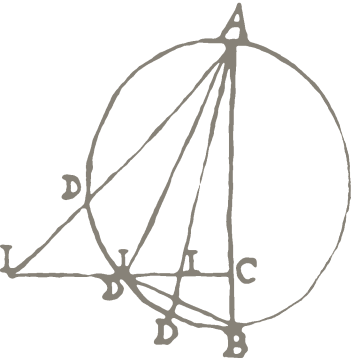

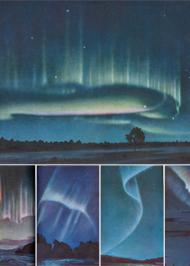

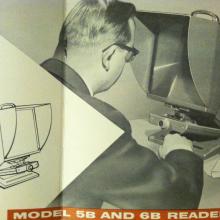

The central themes of this Working Group (the epistemology, practice, material culture, and political economy of data) are understood as overlapping, interrelated categories. Together they are the necessary components for historicizing the emergence of modern data-driven science, and they are not meant to be explored in isolation. We assume, for example, that a history of data depends on an understanding of material culture, that is, the tools and technologies used to collect, store, and analyze data, which make data-driven science possible. More than that, data is immanent to the practices and technologies that support it: not only are epistemologies of data embodied in tools and machines, but in a concrete sense data itself cannot exist apart from them. The precise relationship between technologies, practices, and epistemologies is complex. Big Data, for example, is often associated with the era of computer databases, but this association risks overlooking important continuities with data practices stretching back to the eighteenth century and earlier. The very notion of size, of "bigness," is also contingent on historical factors that need to be contextualized and problematized. We are therefore interested in exploring the material cultures and practices of data in a broad historical context, including the development of information-processing technologies (whether paper-based or mechanical), and in historicizing the relationships between collections of physical objects and collections of data. Attention must also be paid to visualizations and representations of data (graphs, images, printouts, etc.), both as working tools and as means of communication.

In the era following the Second World War, new technologies emerged that enabled new kinds of data analysis and ever-larger scales of data production. At the same time, a new cultural and political context shaped the meaning, significance, and politics of data-driven science during the Cold War and beyond. The term "Big Data" invokes the consequences of increasing economies of scale on many different levels. Ostensibly, it refers to the enormous amount of information collected, stored, and processed in fields as varied as genomics, climate science, paleontology, anthropology, and economics. But it also implicates a Cold War political economy, given that many precursors of twenty-first-century data science began as national security or military projects in the Big Science era of the 1950s and 1960s. These political and cultural ramifications of data cannot be separated from a broader historical consideration of data-driven science.

Historicizing Big Data provides comparative breadth and historical depth to the ongoing discussion of the revolutionary potential of data-intensive modes of knowledge production and the challenges the current "data deluge" poses to society.