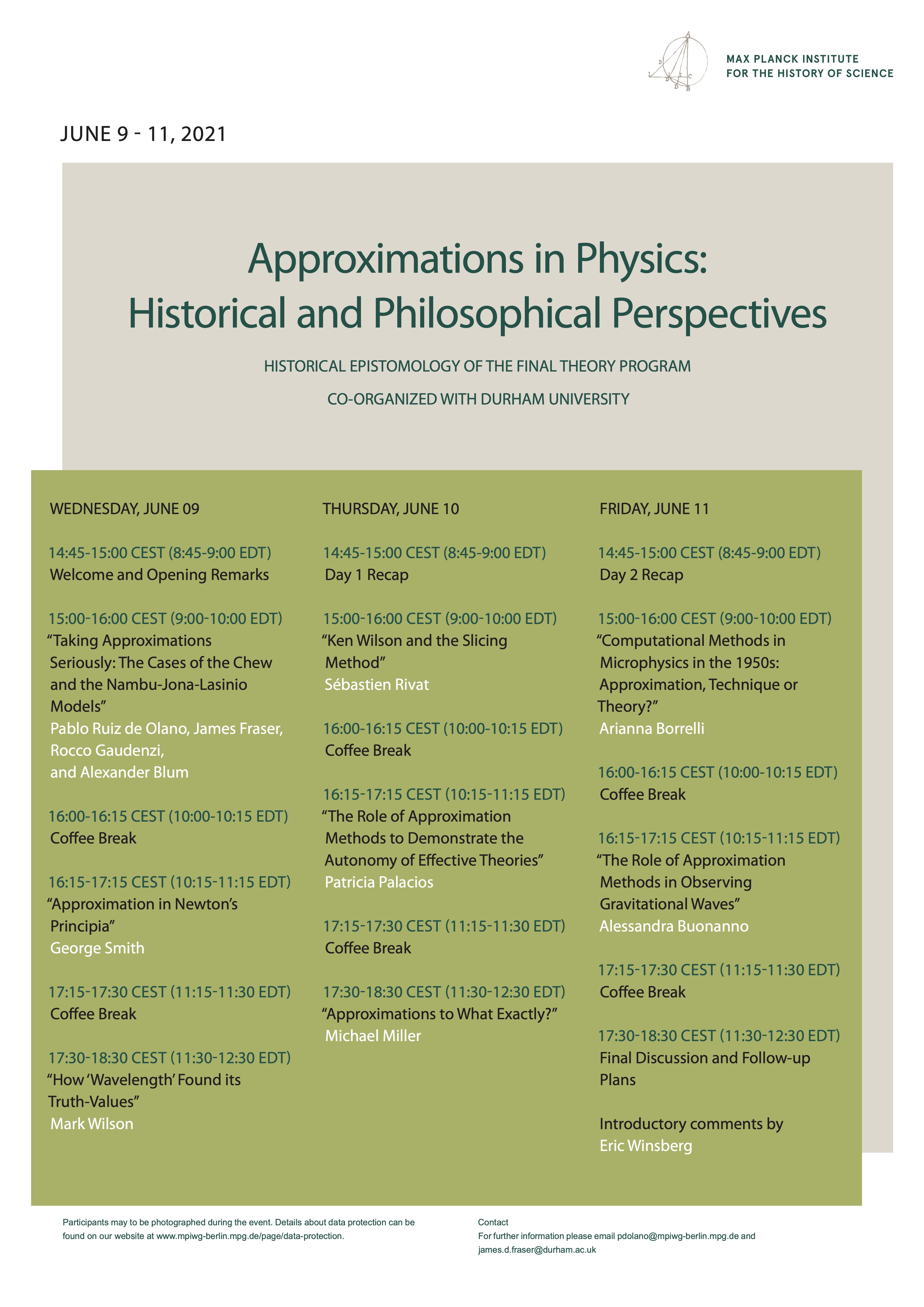

Jun 9-11, 2021

Approximations in Physics: Historical and Philosophical Perspectives

The purpose of the event is to investigate the different roles that approximation methods have played over the history of physics, from Newton to the present day. Our hope is that the workshop will allow us to shed light on our suspicion that approximation methods became more and more central to physics after WWII, and that this constituted an important break with established practice. Part of our goal in organizing the event, then, is to find out whether current attempts to find a theory of everything in physics are better understood as attempts to develop new kinds of approximation methods, rather than as efforts to create new scientific theories in the usual sense.

Abstracts

-

Arianna Borrelli

Title: Computational methods in microphysics in the 1950s: approximation, technique or theory?

Abstract: From the 1950s onward electronic computers came to be employed in an increasing number of scientific fields, among them microphysics. One of the main functions of computing machines was to provide numerical estimates of quantities whose computation by other means was too complex or too lengthy. Typical examples of this use were the discretization of functions or integrals, or the employment of statistical sampling on a scale possible only thanks to electronic machines. In these as in other cases computational methods can in principle be regarded as providing approximations of theoretical quantities which are originally defined by analytical expressions. Moreover, when looking at the further development in microphysics and other scientific fields, one can certainly argue that computational methods took up an increasingly central role in (theoretical) research practices, for example with the use of modelling through computer simulations.

Can these developments be interpreted as a turn towards approximation methods due to problems in analytical computations which went beyond the issues faced by theorists of the previous centuries? I will explore this possibility with an analysis of the use of the Monte Carlo method in the study of particle resonances in the late 1950s and 1960s. At the time, no quantum field theory computations were possible for such phenomena, and some theorists derived phenomenological estimates using a simple model proposed by Enrico Fermi, eventually employing the Monte Carlo method to perform some calculations with it. The computer-aided numerical methods turned out to be so successful that the model they were based on was forgotten, and the Monte Carlo computations started de facto to function as a theoretical tool in their own right, and not just as the numerical application of an analytical theory.

However, these computational methods were hardly ever referred to as approximations, and the fact that they soon came to be employed without reference to the model they were based on seems to suggest that that notion is hardly fitting to describe them. Moreover, the fundamental difficulties in analytically modeling strong interactions played no role in the decision to use computer-aided methods. A more plausible explanation for the developments could be in terms of David Kaiser's thesis that, in the late 20th century, a broad array of computational techniques became increasingly important in fundamental physics at the expense of old-fashioned theories.

Alternatively, the historical episode sketched above could be seen in analogy to what happened in the early modern and modern period, when new material or symbolic means of computation were introduced into natural philosophy and natural science and eventually gained an epistemic independence. This was the case for the geometrical constructions of the heavenly sphere, astronomical clocks, or symbolic notation of analysis. In all these cases the computational tools eventually came to be seen by some authors as a representation of natural order. This view could be supported by looking at the introduction of computational methods in molecular dynamics in the 1950s. In that case, analytical theories existed and were quite unproblematic. Nonetheless, some physicists decided to use computational methods to explore the same phenomena that could be studied analytically, eventually obtaining results in contrast with analytical predictions.

-

Alessandra Buonanno

Title: The Role of Approximation Methods in Observing Gravitational Waves

Abstract: Gravitational-wave astronomy has become the new tool to explore the Universe. Observing gravitational waves and inferring astrophysical and fundamental physics information hinges on our ability to make precise theoretical predictions of the two-body dynamics and gravitational radiation. Because of the non-linear nature of Einstein’s equations, an exact solution of the relativistic two-body problem is not available. However, considerable progress has been made in the last century to provide analytical, approximate solutions. Furthermore, numerical solutions of the two-body dynamics and gravitational radiation are also available via supercomputers, albeit time-consuming. I will review the main approximation methods, their key role in observing and interpreting gravitational waves from binary systems composed of black holes and neutron stars, and the synergistic approach that has successfully combined analytical and numerical results to provide highly accurate waveform models for LIGO and Virgo observations. Since Einstein did not conceive the theory of General Relativity starting from approximations to it, I will also discuss, in relation to gravitational waves, what we gain and what we lose of the original theory when investigating it through approximation methods.

-

Michael Miller

Title: Approximations to what exactly?

Abstract: Fraser (2020) argues that one of the core conceptual challenges facing perturbative quantum field theory is that it provides approximations without specifying an underlying model. According to his view, the significance of this observation is that it makes it difficult to provide a physical explanation of the empirical success of these approximations. In this talk, I address a number of aspects of Fraser's argument. In the absence of an underlying model, the claim that perturbative field theory provides approximations requires careful elaboration, because it is not clear what the approximations are supposed to be approximations to. I articulate a sense in which I think Fraser is correct to say that perturbative field theory generates approximations, and I consider what it would take to provide a physical explanation of the empirical success of approximations understood in this sense.

-

Patricia Palacios

Title: The role of approximation methods to demonstrate the autonomy of effective theories (tentative title)

Abstract: Robustness is the range of single-type token-level variation across which the invariance of the relevant phenomena can be established. On the other hand, universality is the range of inter-type variation across which the invariance of the phenomenon can be established (Gryb et al. 2020). Proving that a certain behavior is both robust and universal can be crucial for developing an autonomous description of the phenomenon that does not depend on micro-physical details and, at the same time, can be used to describe the same behavior in different types of systems. Indeed, establishing that a certain behavior is both robust and universal usually constitutes the first step towards the construction of an autonomous coarse-grained description of the system that “effectively” captures everything that is physically relevant without having to take into account micro-physical details (e.g. ultra-short wavelengths, high energy effects) and that can be applied to different types of systems. The Wilsonian renormalization group (RG) approach has proven to be a successful approximation method for the construction of such autonomous coarse-grained descriptions, which assures a separation of scales. However, there are many cases in which renormalization group methods are not applicable. How shall we warrant the autonomy of coarse-grained (low-energy) descriptions in those cases? In other words, what are the essential properties of RG methods that allow one to warrant the autonomy of coarse-grained descriptions? In this contribution, I will explore this question by focusing on the semi-classical treatment of black holes and the attempt to solve the so-called “trans-Planckian problem.”

-

Sebastien Rivat

Title: Ken Wilson and the slicing method

Abstract: Kenneth Wilson is a central figure in the history of the renormalization group (RG) and effective field theory (EFT) programs. In this talk, I will retrace in some detail the steps that led him to design a new kind of approximate theory---his early prototype of effective theory---out of a new kind of approximation method, the so-called “slicing method”. Wilson had been concerned with developing non-perturbative methods to understand the structure of realistic field theories since the late 1950s. In 1965, and before his encounter with condensed matter physics, he eventually came to divide the state space of a simple meson-nucleon model into a set of well-separated continuous slices and formulate for the first time a sequence of effective models at different energy scales (as well as a set of equations relating their coupling). Using standard non-relativistic perturbative methods as a reference point, I will clarify the sense in which Wilson’s slicing method and first prototype of effective theory involve “approximations” and argue that what really made the difference in 1965 was his algorithmic outlook on physical problems. If time allows, I will conclude with a few remarks about the role of approximation methods in theory-construction.

-

Pablo Ruiz de Olano, James Fraser, Rocco Gaudenzi, and Alexander Blum

Title: Taking Approximations Seriously: The Cases of the Chew and the Nambu-Jona-Lasinio Models

Abstract: Philosophers of science have typically made use of the familiar notions of a scientific theory and a scientific model in order to make sense of science. These two concepts, therefore, have guided their analyses of the manner in which science operates, and of the kinds of products that it gives rise to. In this paper, we will argue for the introduction of a third kind of analytic category that ought to be embraced to allow for a more complete understanding of the scientific endeavor. This is the notion of an approximation method. Our claim is limited to the case of high-energy physics and it relies on a historical argument. More precisely, we examine the genesis of two models that played a crucial role in the development of the discipline during the 1950s and the 1960s: the Chew and the Nambu-Jona-Lasinio models. As we show, both models arose as a combination of an already existing Hamiltonian with a new approximation method. The new approximation methods developed by Chew and by Nambu and Jona-Lasinio, furthermore, constituted the key ingredient behind the success of the two models. These two historical episodes, then, cannot be understood without paying attention to the crucial role that approximations played in them, and we contend that the same is true of the history of high-energy physics more generally. This, in our eyes, justifies the adoption of the concept of an approximation method as an independent unit of analysis in history and philosophy of physics---separate from the already available notions of a scientific theory and a scientific model. We conclude our talk with a discussion of the new kinds of questions that this maneuver allows us to formulate, and the new light that it sheds on the history of physics.

-

George Smith

Title: Approximation in Newton’s Principia

Abstract: From the very beginning of his efforts toward the Principia Newton had concluded that any representation of orbital motions in our planetary system can only be an approximation to the actual motions – not just because of the limits of precision of observations, but far more so because the actual motions are too complicated ever to admit of exact representation. Much of the Principia can be read as endeavoring to show that one particular approach to approximating the motions, namely as arising from inverse-square gravitational forces directed toward the bodies in the system, is to be preferred on physical grounds to other comparably accurate approximations. To do this he resorts to a number of constraints on approximations, most notably: (1) requiring all inferences of forces from observed motions to be licensed by propositions of an “if quam proximè, then quam proximè” form; (2) requiring astronomers’ approximations of the orbital motions referred to a central body (e.g. the sun) instead of the common center of gravity of the system to hold in an asymptotic limit; and (3) requiring corrections to these limit-approximations derived from perturbing forces to account for known systematic deviations from them. The talk will cover Newton’s attempts to meet these requirements and how his approach to the third fell short of the perturbation methods of subsequent celestial mechanics – ending with a characterization of how the latter ultimately yielded much stronger evidence than is generally appreciated.

-

Mark Wilson

Title: How "Wavelength" Found its Truth-Values

Abstract: In this talk I'll outline some of the surprising considerations that have shifted asymptotic considerations out of the pool of the merely "approximative" into that of the "fundamental" in modern classical optics.

Contact and Registration

This event is open to all. Please contact Pablo Ruiz de Olano for further information.